Introduction to sparse matrices — Part 1

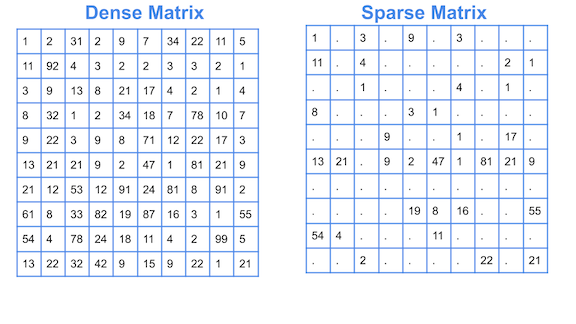

Any matrix which comprises mostly zero values can be termed as a sparse matrix. Roughly, if more than two-thirds of the data points are zero, it is safe to assume that it can be stored as a sparse matrix though there is no strict definite proportion. A dense matrix is the opposite with mostly non-zero values. A sparse matrix can always be stored as a dense matrix too.

A matrix is sparse if many of its coefficients are zero. The interest in sparsity arises because its exploitation can lead to enormous computational savings and because many large matrix problems that occur in practice are sparse.

~ Page 1 Direct Methods for Sparse Matrices, Second Edition 2017

Sparsity: This is the ratio of the total number of zero elements divided by the total number of elements in a matrix.

Sparsity (s) = no of zeros / total number of elements in the matrix

Why sparse matrices?

Dense matrices can be very computationally heavy and exhaustive, both in terms of space and time complexity.

Space complexity

Any matrix which has a lot of features but the data is mostly not present for most of the features, that is, zero.

For example, the matrix for user ratings for the products on an e-commerce website or ratings of movies by different users on IMDB or Netflix. Most of the users do not provide a rating and that’s why most of the elements are zero in such data.

We know that very few people actually provide ratings and thousands of people might have bought it in the past making them the users. So, most of the elements will be zero. If we store this matrix as dense, we’ll be using 32 or 64-bit zero values making it really big in memory size.

Time complexity

Let’s say we want to run a basic collaborative filtering algorithm on the matrix that we created above. Imagine running it on 100,000 users by 10000 product matrix where most of the values are zero. For something as simple as subtracting the avg rating of the user from each product to remove bias (one of the steps performed in collaborative filtering), the compiler will have to go through the entire matrix making the entire process slow. This problem increases as the size of the matrix increases.

Applications of Sparse matrices

In Machine learning, there are a bulk of operations that are performed in a series. In a case where we have a big matrix, converting it into a sparse matrix always helps. A sparse matrix is stored in a different format than a dense matrix. When we store a dense matrix as a sparse matrix, the program only stores the location of non-zero values, making it smaller in memory size and much faster for any computations.

-Recommender systems use sparse matrices for storing its matrices (user-user, user-item, and item-item)

-NLP user sparse matrices for tf-idf matrix, storing frequency of a word in a document and its comparison with different lexicons

-Dummy variables for a class with, let’s say 5000 levels, can be stored as a sparse matrix making it more usable to run with regression and other models

Using sparse matrices in Python

Python offers wide support for sparse matrices. One of the most widely used libraries is Scipy.sparse as it offers many default functions for data exploration.

Creating a sparse matrix:

Converting a dense matrix to sparse

Using sparse matrix with Pandas

Most of the time we have data in a data frame and when we have to perform some operations over it, there might be a lot of dummy variables. In my research work, I had a matrix of almost 6600 dummy variables and 1.4 Million rows with almost 90% of the cells being zeros due to dummy variables. I could not perform regression as I was running into the memory overload issue. As the article suggested above, in all such cases, it is advisable to convert the dense matrix to sparse. So, best way is to convert your data frame to a sparse format while creating dummies.

We can see in the above piece of code how we created dummy variables for one column and stored it as a sparse matrix at the same time.

Using sparse matrices in multiple linear regression is one of the most important things and we will learn about that in the second part of this series.